What are the risks associated with GenAI?

Like any new technology that spreads quickly, GenAI also brings new challenges that did not even exist until recently. This is a problem because currently, there is not enough awareness about the potential risks this technology entails. To date, the main challenges a company faces are of two types:

- Compliance: avoiding fines and penalties by adhering to European regulations regarding data processing, both under GDPR and the more recent EU AI Act.

- Cybersecurity: GenAI has introduced many innovations but also new threats in terms of security.

The risks to GenAI security can be categorized into two main areas:

- Usage: protecting your organization from risks arising from the use of GenAI tools like ChatGPT, or AI code assistants by employees from any department.

- Integration: protecting your organization from risks posed by internal applications using first or third-party LLMs.

Let's look in more detail at both cases!

Use of Public GenAI Apps

As we know, Large Language Models (LLMs) produce more accurate outputs when provided with more detailed context.

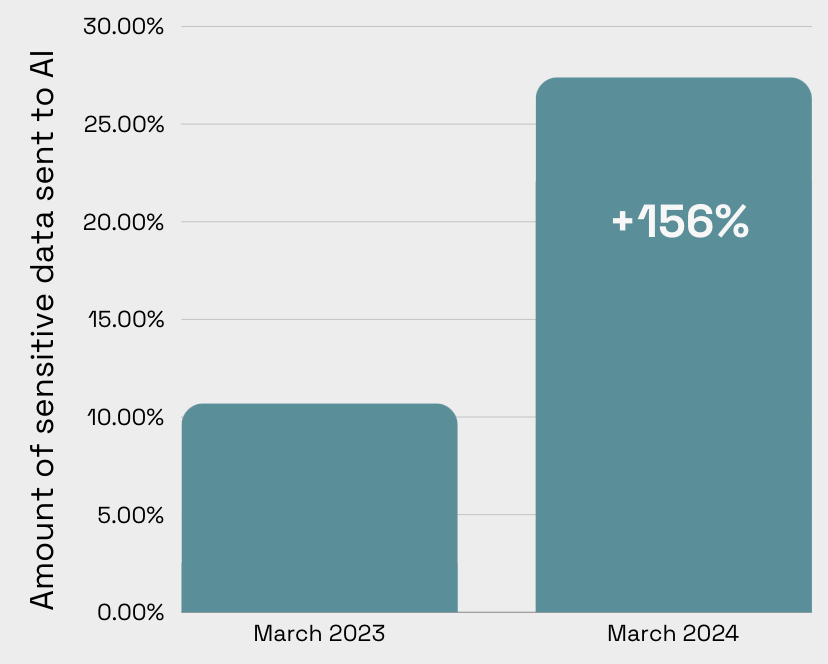

This leads professionals, who use these tools to enhance their performance, to input personal data and business information within the shared context, sharing this data with third parties. It is estimated that more than 25% of prompts contain sensitive information. An incredible increase, more than double, compared to last year when they were already at 10%.

Common tools where this occurs include: ChatGPT and other chatbots, GitHub Copilot and other copilots, Midjourney, Grammarly, and other third-party apps widely used in companies.

The IT security manager, typically, recognizes that completely limiting the use of GenAI could curb innovation and reduce the company's competitive advantage. Thus, understanding and mapping the risks of Shadow AI is crucial to figuring out how to mitigate them.

As mentioned earlier, these risks can lead to legal, compliance, or regulatory consequences, loss of customer trust, and a serious blow to the company's reputation.

Internal Apps

In the case of integrating GenAI capabilities and features into internal applications, there are several associated risks, the most recurring of which is prompt injection.

Prompt injections are malicious instructions that can be used to manipulate an LLM. Once the LLM reads this prompt, it provides a harmful or misleading response. For example, such a prompt could convince a model to disclose the salary of an executive within your company or to exfiltrate sensitive information about contracts that should not leak.

There are two types of prompt injections:

- Jailbreaking, or direct prompt injections, occur when malicious users alter the system prompt, potentially exploiting backend systems via the LLM.

- Indirect prompt injections occur when LLMs process inputs from external sources, such as websites, controlled by attackers. This can mislead the LLM, allowing attackers to manipulate users or systems. Such injections might not be human-readable but are still processed by the LLM.

How likely is it that my organization will be affected by these new risks?

We can agree that there is a wide range of new risks brought by GenAI, but what is the likelihood that these risks will actually occur?

In the case of internal use of public GenAI apps, the risk is there and already exists in a large part of organizations. As evidenced by a Cisco report, 27% of businesses worldwide have already blocked GenAI tools, but this solution is not ideal, as it loses all the advantages that this technology brings, and still leaves the phenomenon of Shadow AI.

From what we have seen in companies that collaborate with Glaider, there are at least 30 different GenAI tools used each week in the average organization, with ChatGPT at the forefront. It is clearly very difficult to prevent a professional from entering sensitive information into these tools. A resonant example from last year is the Samsung incident where a couple of employees accidentally entered proprietary code into ChatGPT. This led to two consequences:

1. Direct blocking of any GenAI tool

2. An economic damage estimated at around €45M

What to do then?

The instinctive reaction to security risks might be to hinder adoption and limit use, but in the era of GenAI, these types of mitigation techniques are outdated and ineffective. Employees will find alternative ways to use GenAI tools without security approval, leading to a lack of trust and making security teams irrelevant.

A second way might be to purchase the enterprise license of one of the tools, let's take ChatGPT Enterprise as an example. Acquiring the license would allow you to have a private instance of the model and the promise that your data will not be used to improve future models.

“You own and control your business data in ChatGPT Enterprise. We do not train on your business data or conversations, and our models don’t learn from your usage”

Well, can we be sure that there will definitely be no way for sensitive information to leak and my employees can use the AI, right? Unfortunately no, securing a single tool with the vast array of GenAI tools that exist will not be enough because it will still be possible for a person to use Perplexity, Midjourney, PDF.ai, or any other tool and share information that should not be shared with third-party GenAI apps.

The ideal framework for mitigating risks and bringing Shadow AI to light might be described below:

1. First, it is necessary to provide visibility, as most security teams have no idea what types of tools GenAI are used in their organization, when, where, and how - including the data uploaded and the type of output produced. Without visibility on the use of GenAI, the decision-making process to govern its use is impossible. Security teams must produce a complete inventory of all GenAI applications used in their organization, assessing which of these applications are capable of storing and learning about business data.

2. Once discovered what types of tools are used, it is necessary to design and implement security policies effective at the organizational level to address these tools and their use, but especially automatically applied and, ideally, transparent to the end user, the employee.

3. Lastly, monitor the application of these policies to adapt them and make them balanced and in line with the needs of the employees.

Currently, most companies are unable to follow this framework as it is difficult to track which GenAI apps are used and even if it were possible, it is complicated to enforce these policies. To propose and help companies adopt this framework we created Glaider.

Glaider is the solution for securely adopting GenAI by giving visibility to the security manager on the apps used within the company and ensuring that employees do not input sensitive information thanks to an algorithm that identifies and hides sensitive information.